Overview

Fume Engine is a long-term project aimed at exploring and understanding every aspect of a game engine. It is intended to demonstrate aptitude and skill in implementing these areas by applying software best practices, design patterns, and careful memory management in modern C++20.

Current Features

Fume Engine is currently at an early stage of development. The following features are implemented:

- ✔ Windows script for project generation using Premake with the MSBuild generator.

- ✔ Linux script for project generation using CMake with Ninja/Make generators.

- ✔ Flexible engine building and usage as a static or shared library.

- ✔ Custom cross-platform, API-agnostic Window, Input & KeyCodes.

- ✔ Custom cross-platform, API-agnostic Renderer.

- ✔ 3D Batch Rendering.

- ✔ Compile-time Entity Component System.

- ✔ Runtime Camera with Perspective and Orthographic projections.

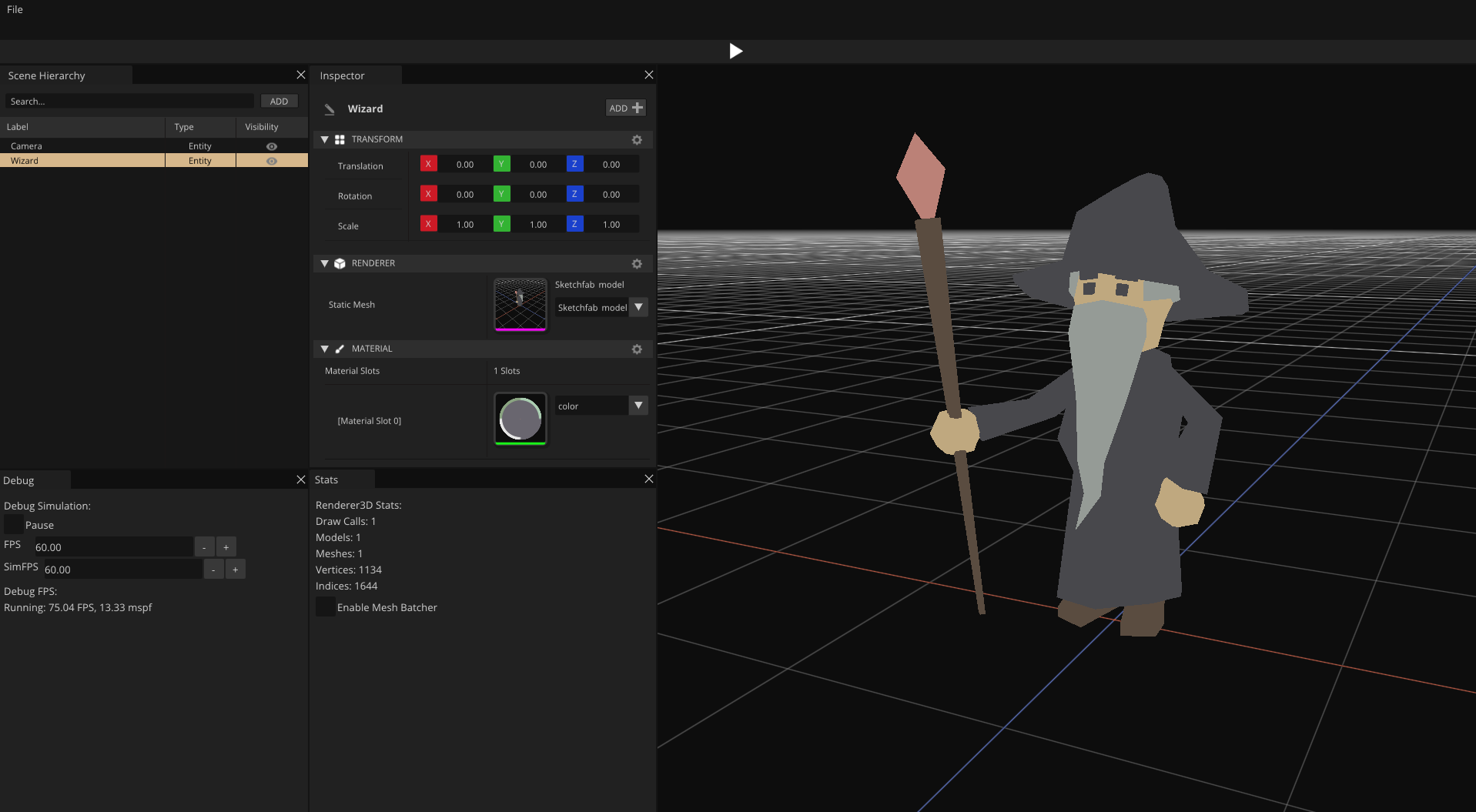

- ✔ Editor Camera Controller.

- ✔ Editor UI Panels: Scene Hierarchy, Properties Inspector and Scene Viewport.

- ✔ Scene Controller & Play Modes (Editor / Runtime).

Dependencies

The project uses the following dependencies as submodules:

- GLFW: for window creation and user input management on Windows, Linux, and macOS.

- GLAD: loader for OpenGL 4.6, used as the main graphics API.

- STB: for loading and manipulating image files for textures.

- ImGui: for GUI components and interaction.

- Assimp: for loading and managing 2D/3D model assets.

The project also uses the following third-party dependencies as build system automation tools:

- Premake5: build system for the MSVC compiler and Visual Studio project generation.

- CMake: automation tool to use the Ninja / MinGW build systems with the gcc/g++ compilers.

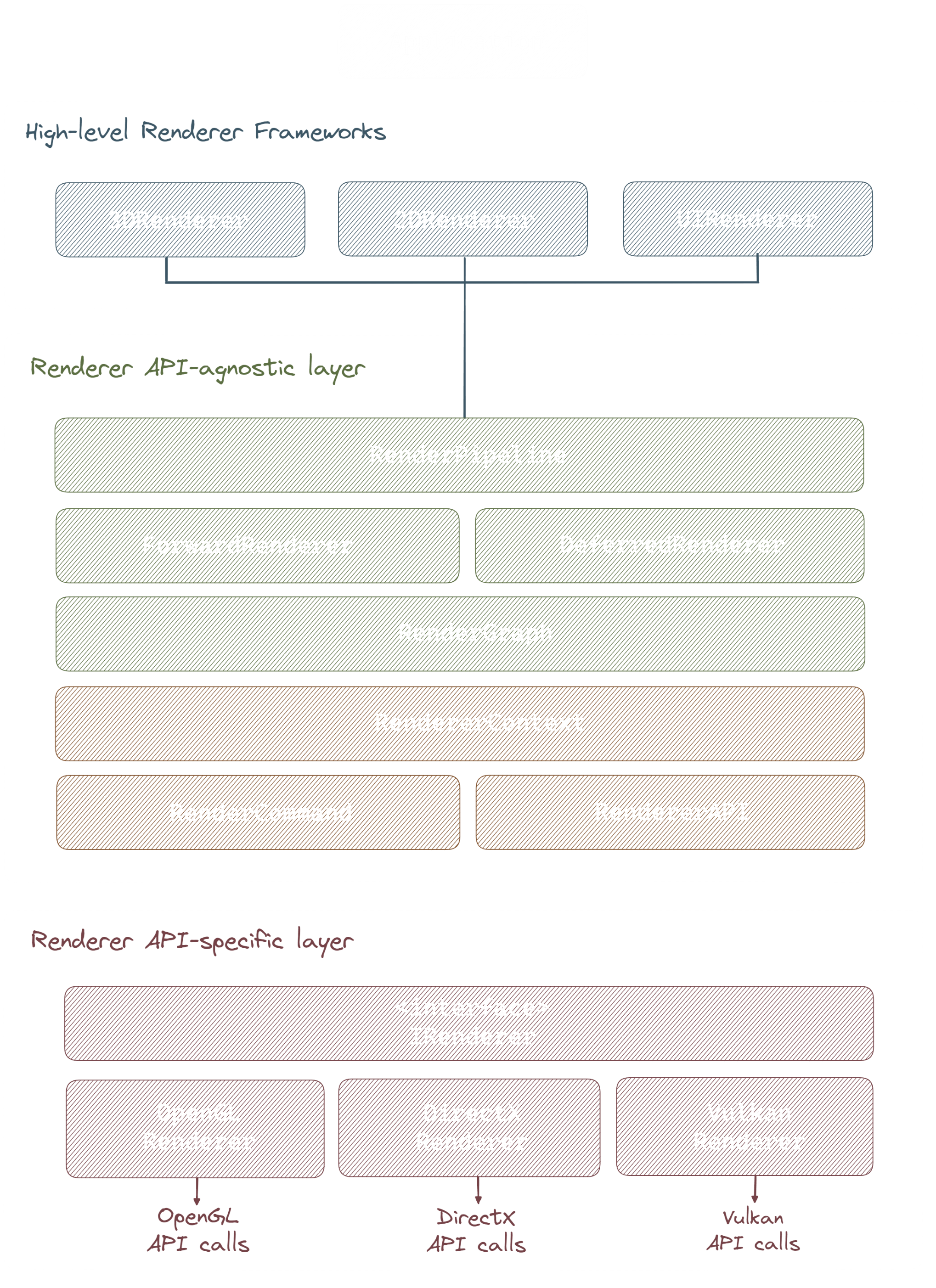

Rendering Architecture

The rendering architecture is probably the most developed and most actively improved core system of the engine. This section describes how it was designed from the outset as a cross-platform, API-agnostic rendering system.

High-level Renderer Frameworks

The rendering structure of my engine relies on several frameworks (Renderer3D, Renderer2D, RendererUI), each acting as a wrapper for static methods that abstract the rendering process within a given scene.

- At the beginning of each frame, BeginFrame() is invoked with parameters representing the scene's current setup, such as camera transformations, lighting conditions, and environment mapping.

- Subsequently, SubmitObject() calls are made to add each object in the scene to a list for rendering.

- Finally, EndFrame() is executed to process the rendering logic, which typically involves iterating through the list of objects, creating commands from their internal data, and sending them to the command queue.

Later, in the RenderContext, each command is translated into the renderer's API calls, which handle the low-level details of the rendering process. The renderer abstracts these API calls to provide a high-level interface for the rendering engine.
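The three-call frame lifecycle described above can be sketched roughly as follows. This is only an illustration under assumptions: `Renderer3DSketch`, `FrameSetupSketch`, `FrameObjectSketch` and `FramePacketSketch` are hypothetical stand-ins, not the engine's actual types, and the "command" is reduced to a string.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-ins for the engine's scene data; not the real types.
struct FrameSetupSketch  { std::string CameraName; };
struct FrameObjectSketch { std::string Name; };
struct FramePacketSketch { std::string Description; };

class Renderer3DSketch
{
public:
    // Stores the per-frame scene setup and clears last frame's submissions.
    static void BeginFrame(const FrameSetupSketch& aSetup)
    {
        assert(!sInFrame && "BeginFrame called twice without EndFrame");
        sSetup = aSetup;
        sObjects.clear();
        sInFrame = true;
    }

    // Adds an object to the list that will be rendered this frame.
    static void SubmitObject(const FrameObjectSketch& aObject)
    {
        assert(sInFrame && "SubmitObject called outside a frame");
        sObjects.push_back(aObject);
    }

    // Turns every submitted object into a command and appends it to the queue.
    static void EndFrame(std::vector<FramePacketSketch>& aCommandQueue)
    {
        assert(sInFrame && "EndFrame called without BeginFrame");
        for (const auto& Object : sObjects)
            aCommandQueue.push_back(
                FramePacketSketch{"Draw " + Object.Name + " with " + sSetup.CameraName});
        sInFrame = false;
    }

private:
    inline static FrameSetupSketch sSetup{};
    inline static std::vector<FrameObjectSketch> sObjects{};
    inline static bool sInFrame{false};
};
```

Client code would call `BeginFrame`, then `SubmitObject` once per object, then `EndFrame`, and nothing is turned into commands until the frame is closed.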

Cross-platform Abstraction Layer

Beneath the rendering frameworks, the RenderPipeline class is used as a compositional object responsible for storing the various types of renderers that can be generated. Currently only the Forward Renderer is implemented, but a deferred renderer is being considered for the future.

Additionally, this class manages the iteration through the available cameras in the scene to execute the type of renderer each camera refers to, preparing for the frame creation.

Each renderer is tasked with scheduling and executing various types of render passes, such as the Geometry Pass, SkyboxPass, TransparentPass, ScreenPass, etc., in the order deemed appropriate.

To manage this, it utilizes the RenderGraph class, which is responsible for queuing and scheduling the passes for execution and generating the necessary resources to achieve the desired final effect.

Each pass is assigned a list of command buffers to store each action / resource in packets and submit them to the RenderContext.

Lastly, RendererAPI holds a static unique_ptr to the instance of the renderer backend.

This instance is a pointer to the IRenderer interface, so the RendererAPI does not know which type of backend the renderer module is using.

The IRenderer interface is the first layer of abstraction: it contains a set of pure virtual functions that represent the API-specific implementation calls.
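A minimal sketch of this arrangement, assuming a much smaller interface than the real one: `IRendererSketch`, `OpenGLRendererSketch` and `RendererAPISketch` are hypothetical names, and the backend only records what it was asked to do instead of calling a graphics API.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Interface of pure virtual functions that each backend implements.
class IRendererSketch
{
public:
    virtual ~IRendererSketch() = default;
    virtual void DrawIndexed(unsigned aIndexCount) = 0;
    virtual std::string Name() const = 0;
};

// Hypothetical backend; a real one would issue graphics API calls here.
class OpenGLRendererSketch final : public IRendererSketch
{
public:
    void DrawIndexed(unsigned aIndexCount) override { LastIndexCount = aIndexCount; }
    std::string Name() const override { return "OpenGL"; }

    unsigned LastIndexCount{0};
};

// Owns the backend through the interface only, so callers never see its concrete type.
class RendererAPISketch
{
public:
    static void SetBackend(std::unique_ptr<IRendererSketch> aBackend)
    {
        sBackend = std::move(aBackend);
    }

    static IRendererSketch& Get()
    {
        assert(sBackend && "No renderer backend has been set");
        return *sBackend;
    }

private:
    inline static std::unique_ptr<IRendererSketch> sBackend{};
};
```

Because the static pointer is typed as the interface, swapping the backend is a single `SetBackend` call and no calling code changes.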

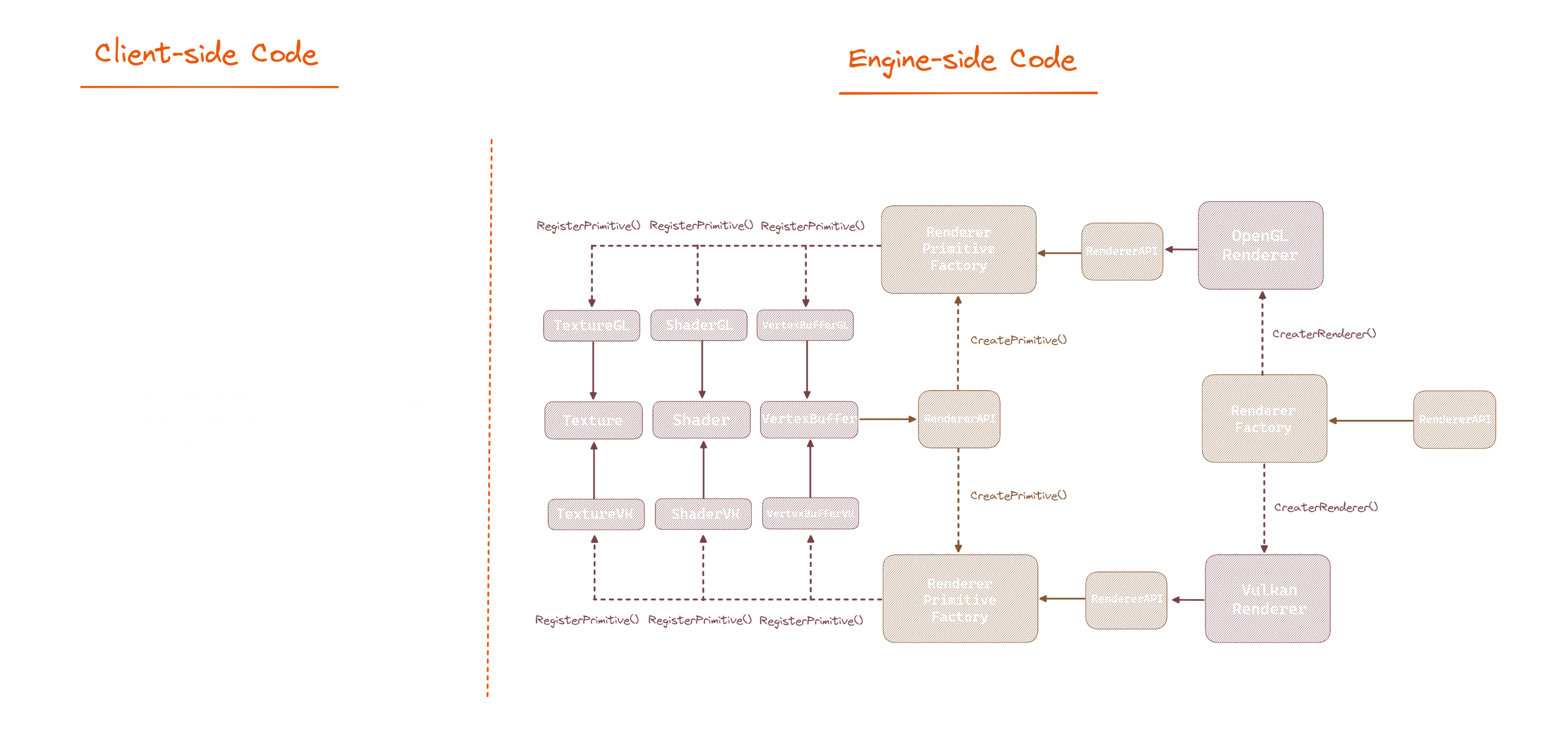

Extensible Renderer Factory

RendererAPI utilizes a set of extensible factories underneath, one to register and generate the renderer backends and another to register all types of renderer primitives available for the generated renderer backend for their subsequent creation.

We're talking about concepts abstracted to objects such as:

- VertexBuffer

- IndexBuffer

- Texture

- Shader

- Framebuffer

- Pipeline

- etc...

Each of these render primitives has its own platform/API-specific implementation that inherits from an interface.

Each implementation contains a static Create() method, the memory address of which is stored in a map within the primitive renderer factory, along with a key that identifies the type of primitive. Thus, we only need to call the factory with the desired primitive type to generate that particular primitive, regardless of the instantiated backend.

We centralize the creation of renderer constructs within a factory, maintaining a code structure that's easy to extend and expand. This approach anticipates future needs, such as the addition of a new renderer backend or specific primitive.
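The registration idea can be illustrated with a small sketch. Everything here is an assumption made for the example: the `PrimitiveTypeSketch` keys, the `IRenderPrimitiveSketch` interface and the two OpenGL classes are hypothetical, and `std::map` stands in for whatever map the engine actually uses.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

enum class PrimitiveTypeSketch { VertexBuffer, IndexBuffer, Texture };

// Common interface for all render primitives (hypothetical).
class IRenderPrimitiveSketch
{
public:
    virtual ~IRenderPrimitiveSketch() = default;
    virtual std::string Describe() const = 0;
};

class OpenGLVertexBufferSketch final : public IRenderPrimitiveSketch
{
public:
    static std::unique_ptr<IRenderPrimitiveSketch> Create()
    {
        return std::make_unique<OpenGLVertexBufferSketch>();
    }
    std::string Describe() const override { return "OpenGL vertex buffer"; }
};

class OpenGLIndexBufferSketch final : public IRenderPrimitiveSketch
{
public:
    static std::unique_ptr<IRenderPrimitiveSketch> Create()
    {
        return std::make_unique<OpenGLIndexBufferSketch>();
    }
    std::string Describe() const override { return "OpenGL index buffer"; }
};

class PrimitiveFactorySketch
{
public:
    using CreateFn = std::unique_ptr<IRenderPrimitiveSketch> (*)();

    // Stores the address of a static Create() function under its primitive type.
    void Register(PrimitiveTypeSketch aType, CreateFn aCreate)
    {
        assert(aCreate != nullptr && "Cannot register a null creator");
        mCreators[aType] = aCreate;
    }

    // Returns a new primitive, or nullptr if the type was never registered.
    std::unique_ptr<IRenderPrimitiveSketch> Create(PrimitiveTypeSketch aType) const
    {
        const auto It = mCreators.find(aType);
        return It != mCreators.end() ? It->second() : nullptr;
    }

private:
    std::map<PrimitiveTypeSketch, CreateFn> mCreators;
};
```

Adding a new backend or primitive then means writing the class and one `Register` call; the code that asks the factory for a primitive stays untouched.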

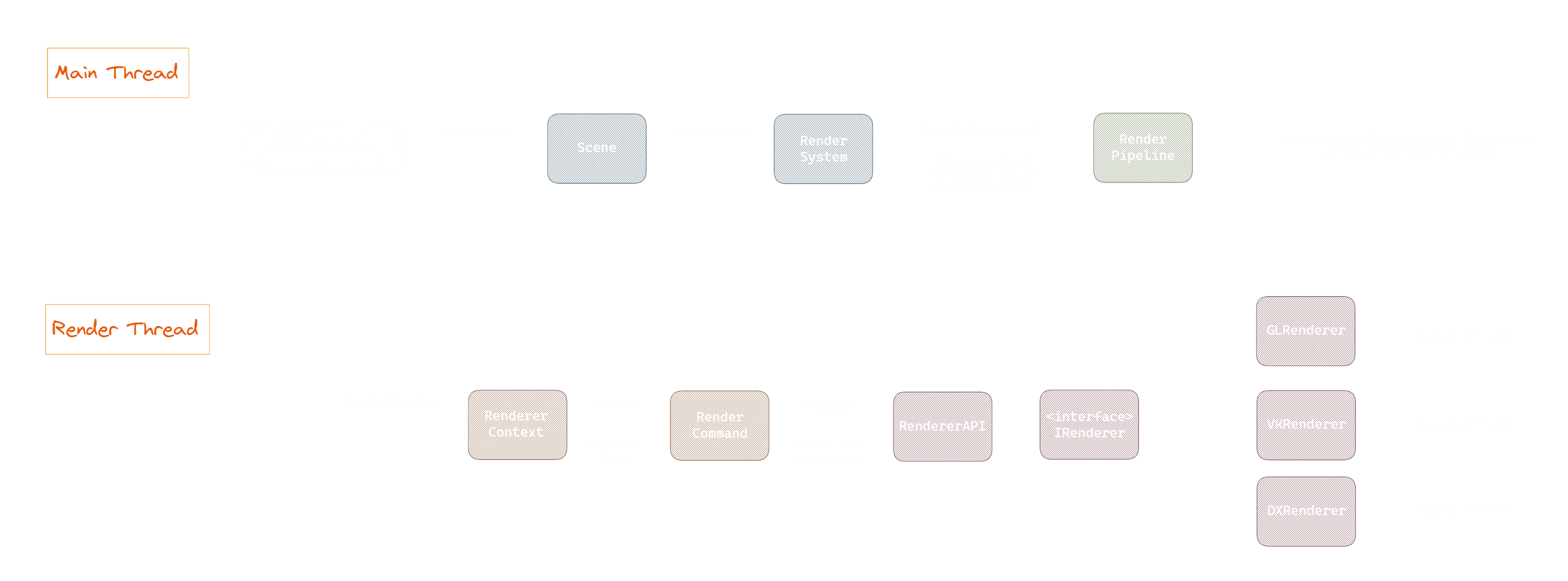

Rendering Flow

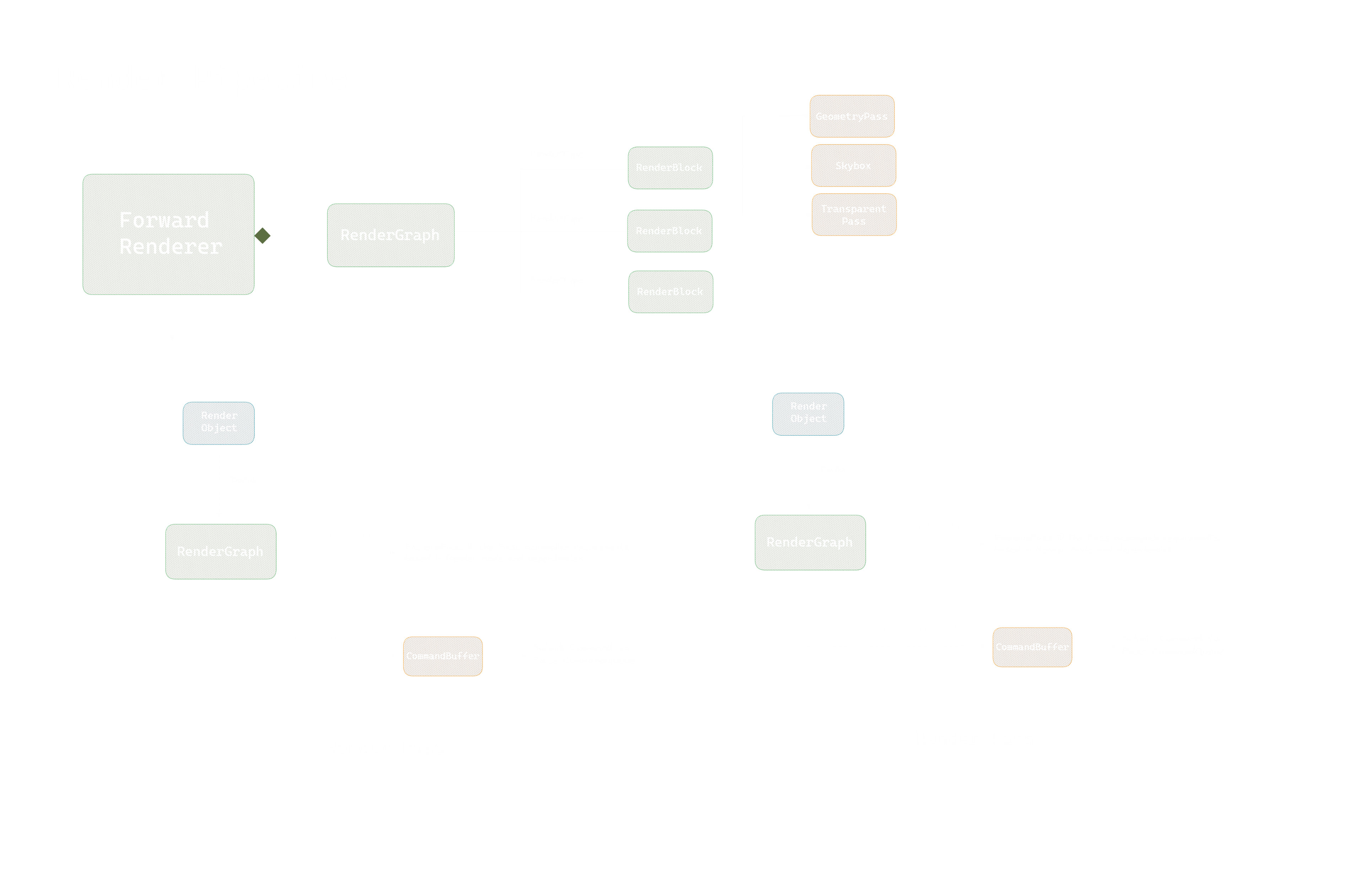

The accompanying UML diagram shows the rendering flow in the engine. On the main thread, the RenderPipeline retrieves the RenderSystem components on each scene update, transforms them into render objects, and organizes them into render queues.

Rendering Flow UML Design

These queues will be used by the render passes to generate the command buffers. Once the frame execution is completed, all commands from each render pass are sent to the RenderContext.

This is ideal because all the information for that frame is then ready to be sent to the GPU from a different thread, where the RenderCommand processes each command and uses the RendererAPI to execute the appropriate calls for the graphics API in use.

Currently, the command buffer is not fully implemented. For now, it works as a thin layer above the RendererAPI that forwards to the API call methods abstracted by IRenderer.
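The record-now, execute-later idea behind the command buffers can be sketched as below. This is a simplified illustration, not the engine's implementation: `CommandListSketch` is a hypothetical class that stores closures in a `std::vector` rather than packed command packets.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical command list: passes record closures, the context runs them later.
class CommandListSketch
{
public:
    // Stores a command without executing it.
    void Record(std::function<void()> aCommand)
    {
        assert(aCommand && "Cannot record an empty command");
        mCommands.push_back(std::move(aCommand));
    }

    std::size_t Size() const { return mCommands.size(); }

    // Executes the commands in recording order, then empties the list.
    void Flush()
    {
        for (auto& Command : mCommands)
            Command();
        mCommands.clear();
    }

private:
    std::vector<std::function<void()>> mCommands;
};
```

Since recording and flushing are separate steps, the flush could later be moved to a render thread without changing the code that records commands.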

3D Rendering Pipeline

One of the earliest features I wanted to implement was the ability to render 3D models on the screen. Thus, from the very inception of the engine's development, I focused on creating a straightforward rendering system.

This initial effort eventually evolved into a high-level 3D framework named 3D Renderer. Serving as a wrapper for 3D models, this framework simplifies the rendering process with three easy-to-use static method calls. These methods can be employed anywhere in the client code to render 3D objects.

This wrapper internally communicates with the RenderPipeline class, where the only renderer currently implemented in my engine is the Forward Renderer.

Initially, the Forward Renderer was designed to manage the render passes that could be added to implement various rendering techniques, among other things. However, considering that this could become overwhelming in the future due to dependencies and requirements among passes, light calculations, etc., I decided to implement a dedicated class to manage these issues: the RenderGraph class.

The RenderGraph class is responsible for registering the selected render passes and categorizing them into different RenderBlocks based on each RenderPass' rendering event. It also manages the type of render object each RenderPass receives, depending on the RenderType of that pass, which can be Opaque, Transparent, Overlay, etc. Additionally, the RenderGraph class schedules and sorts these passes based on any existing dependencies between them.
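Dependency-based ordering of passes can be sketched with a simple scheduling loop. This is an assumption about one possible approach, not the RenderGraph's actual algorithm: `PassNodeSketch` and `CompilePassOrder` are hypothetical, passes are identified by name, and the loop repeatedly emits any pass whose dependencies are already scheduled.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical pass description: a name plus the names of passes it depends on.
struct PassNodeSketch
{
    std::string Name;
    std::vector<std::string> DependsOn;
};

// Returns pass names ordered so that every pass comes after its dependencies.
// Assumes every dependency names a registered pass and that there are no cycles.
std::vector<std::string> CompilePassOrder(const std::vector<PassNodeSketch>& aPasses)
{
    std::vector<std::string> Order;
    std::vector<bool> Done(aPasses.size(), false);

    const auto IsScheduled = [&Order](const std::string& aName)
    {
        for (const auto& Scheduled : Order)
            if (Scheduled == aName) return true;
        return false;
    };

    while (Order.size() < aPasses.size())
    {
        bool Progress = false;
        for (std::size_t i = 0; i < aPasses.size(); ++i)
        {
            if (Done[i]) continue;

            bool Ready = true;
            for (const auto& Dep : aPasses[i].DependsOn)
                Ready = Ready && IsScheduled(Dep);

            if (Ready)
            {
                Order.push_back(aPasses[i].Name);
                Done[i] = true;
                Progress = true;
            }
        }
        assert(Progress && "Cyclic or missing dependency between passes");
        if (!Progress) break; // Avoid looping forever in release builds.
    }
    return Order;
}
```

The registration order of the passes no longer matters with this approach: a screen pass registered first is still scheduled after the passes it reads from.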

Once the RenderGraph is set up, or "compiled", it takes on the responsibility of executing each render pass. Each render pass receives a reference to a command list from the RenderGraph, which the pass fills with command buffers during its execution.

At the end of executing all queued passes, the RenderPipeline forwards all command lists from the RenderGraph to the RenderContext. These are then evaluated in the Render Thread using the specific implementation details of the graphics API in use.

Rendering Pipeline UML Design

3D Performance Optimizations

One of the topics that fascinates me the most within a game engine is performance optimization and efficiency improvement. Therefore, this section will aim to describe the various optimization techniques that have been implemented in my game engine.

3D Batch Rendering

Batch rendering is a rendering optimization that reduces the number of draw calls issued per frame.

In my approach, I decided to implement a mesh-based batcher, in which each unique mesh maintains a batch configuration. This configuration accumulates indices from the new vertices being added to the final data buffer, allowing for a singular draw call.

- Vertex Position: To achieve this, each vertex has to be transformed into world coordinates on the CPU before it is written to the buffer. This is necessary because the entire batch is processed in a single draw call, so the vertex shader cannot apply a different transform to each object.

- Textures: To bind the textures correctly, the shader stores an array of up to 32 samplers per mesh, because each mesh can have several materials with different textures. Each vertex is therefore associated with an index that identifies the sampler of the texture to use. This allows the pixel shader to fetch the corresponding texel for that vertex and render the final pixel on the screen.
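The per-vertex texture index implies some bookkeeping that maps each texture to a sampler slot. A minimal sketch of that lookup, under assumptions, is shown here: `TextureSlotsSketch` and `ResolveTextureIndex` are hypothetical, and textures are represented by plain integer IDs rather than texture objects.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr std::uint32_t MaxTextureSlotsSketch = 32; // Sampler array size mentioned above.

// Hypothetical batch state; textures are identified by an opaque ID here.
struct TextureSlotsSketch
{
    std::array<std::uint32_t, MaxTextureSlotsSketch> Slots{};
    std::uint32_t Count{0};
};

// Returns the sampler index for aTextureId, reusing its slot if already present.
std::uint32_t ResolveTextureIndex(TextureSlotsSketch& aBatch, std::uint32_t aTextureId)
{
    for (std::uint32_t i = 0; i < aBatch.Count; ++i)
        if (aBatch.Slots[i] == aTextureId) return i;

    assert(aBatch.Count < MaxTextureSlotsSketch && "Batch is out of texture slots");
    aBatch.Slots[aBatch.Count] = aTextureId;
    return aBatch.Count++;
}
```

The returned index is the value that would be written into each vertex so the pixel shader can pick the right sampler.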

To apply this technique, each time a new model is submitted to the renderer, we obtain its mesh and iterate over each vertex.

Next, we write through the MeshVertexBuffer pointer, which stores the relevant data of each vertex, including its position transformed to world coordinates and its corresponding texture index.

    // Transform, Color, textureIndex and MeshBatch are not declared in this excerpt;
    // they are assumed to be resolved elsewhere for the item being submitted.
    void RenderCommand::PopulateVertexBuffer(const DrawableItem& aItem)
    {
        auto& Meshes {aItem.Mesh.Mesh->GetMeshes()};
        // Presumably used for the texture-index lookup, which this excerpt omits.
        const auto& Materials {aItem.Materials};

        for (auto& SubMesh : Meshes)
        {
            const auto& SubMeshTransform {SubMesh.Transform};

            for (auto& Vertex : SubMesh.Vertices)
            {
                const auto& Position = Vertex.Position;

                // Bake the world transform into the vertex on the CPU.
                const auto WorldPos { Transform
                                      * SubMeshTransform
                                      * Math::Vec4{Position.x, Position.y, Position.z, 1.f}};

                MeshBatch.MeshVertexBufferPtr->Position = {WorldPos.x, WorldPos.y, WorldPos.z};
                MeshBatch.MeshVertexBufferPtr->TexCoord = Vertex.TexCoord;
                MeshBatch.MeshVertexBufferPtr->Normal   = Vertex.Normal;
                MeshBatch.MeshVertexBufferPtr->Color    = Color;
                MeshBatch.MeshVertexBufferPtr->TexIndex = textureIndex;

                // Advance the write cursor to the next vertex slot.
                MeshBatch.MeshVertexBufferPtr++;
            }

            MeshBatch.MeshIndexCount += MeshBatch.NumIndices;
        }
    }

Thus, batch rendering boils down to the following steps:

- First, we check whether the batch has a non-zero index count, which confirms there is vertex data inside the batch vertex buffer.

- If there is, we subtract the start address of the buffer from the current write address to get the total size of the vertex data.

- After that, we upload that amount of vertex data through the vertex buffer of the mesh and bind each of the textures stored in the batch (those belonging to the unique mesh being rendered).

- Finally, the batching strategy asks the render command to submit the API call to the command buffer in order to draw every entity that uses the mesh at once.

    void RenderCommand::DrawBatch(const auto& API, const auto& DrawableItem)
    {
        auto& MeshBatch = DrawableItem.Mesh->GetBatchData();

        // Only draw when something was batched for this mesh.
        if (MeshBatch.MeshIndexCount)
        {
            // Upload vertex data: size in bytes = write cursor - buffer start
            // (byte-pointer casts assumed).
            std::uint32_t dataSize {static_cast<std::uint32_t>(
                reinterpret_cast<std::uint8_t*>(MeshBatch.MeshVertexBufferPtr)
                - reinterpret_cast<std::uint8_t*>(MeshBatch.MeshVertexBufferBase))};
            MeshBatch.VB->SetData(MeshBatch.MeshVertexBufferBase, dataSize);

            // Bind textures
            for (std::uint32_t i = 0; i < MeshBatch.TextureSlotIndex; i++)
                MeshBatch.TextureSlots[i]->Bind(i);

            // Submit API call to command buffer
            RenderCommand::DrawIndexed(API, MeshBatch.IB->GetCount());
        }
    }

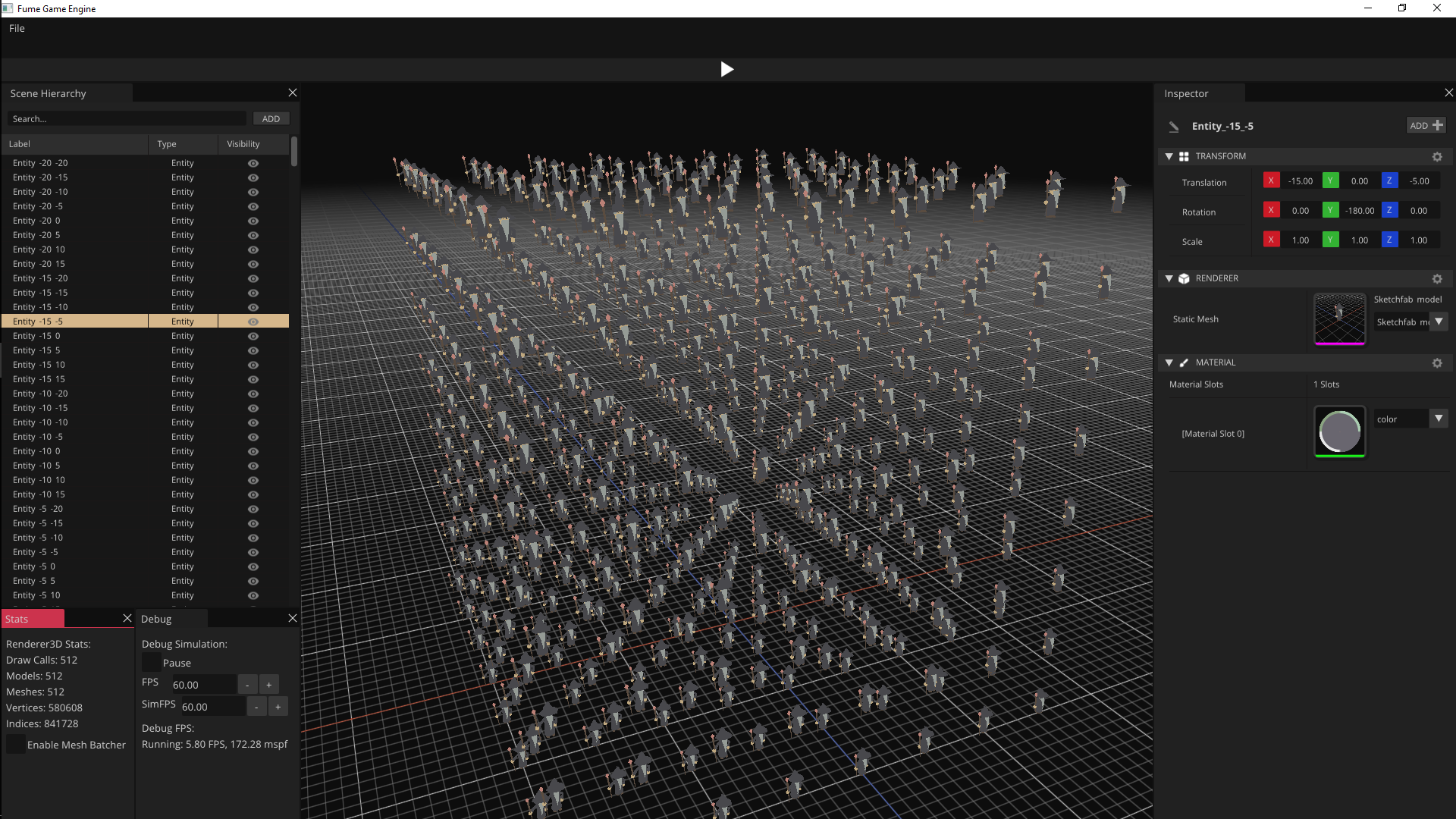

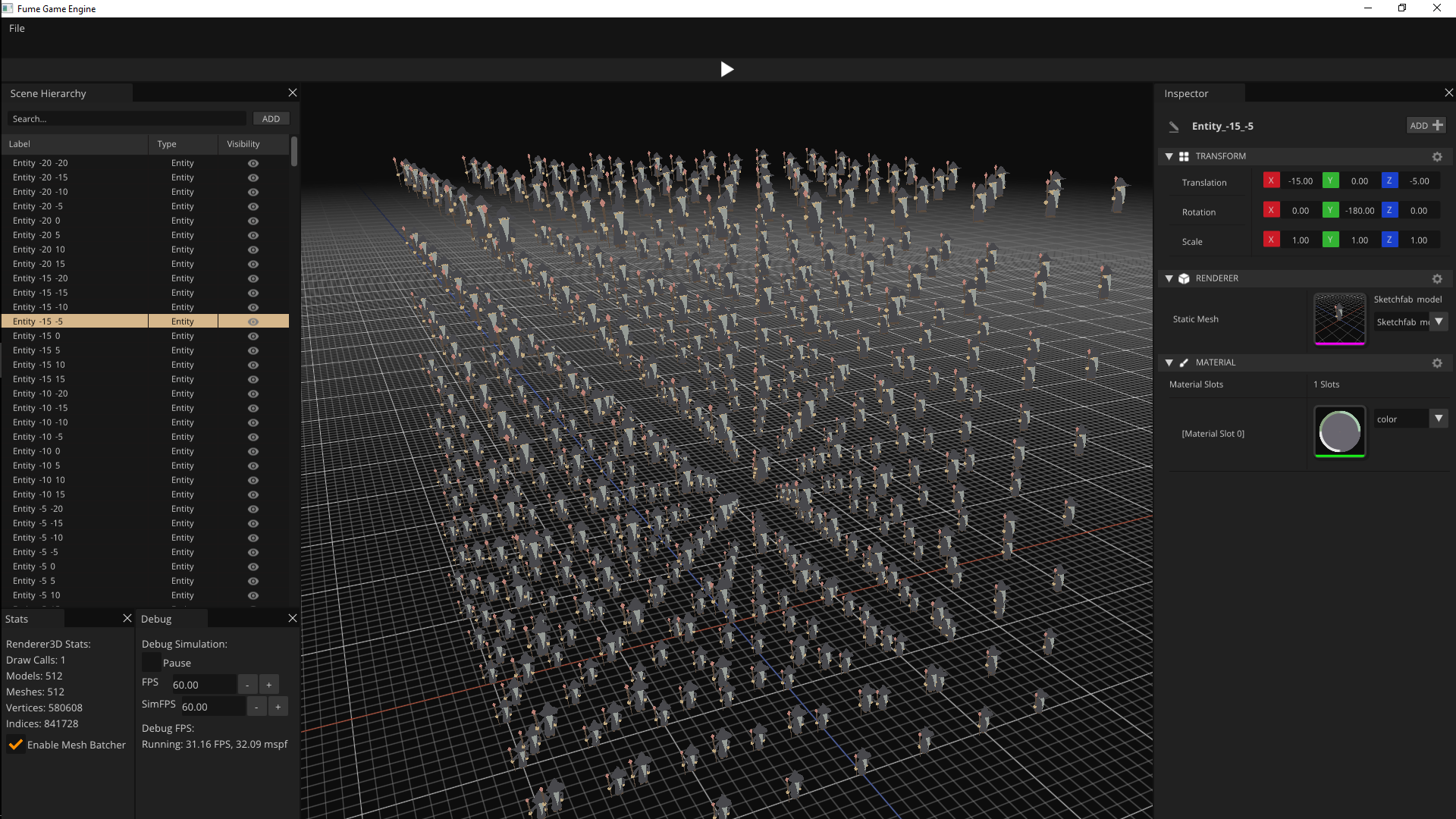

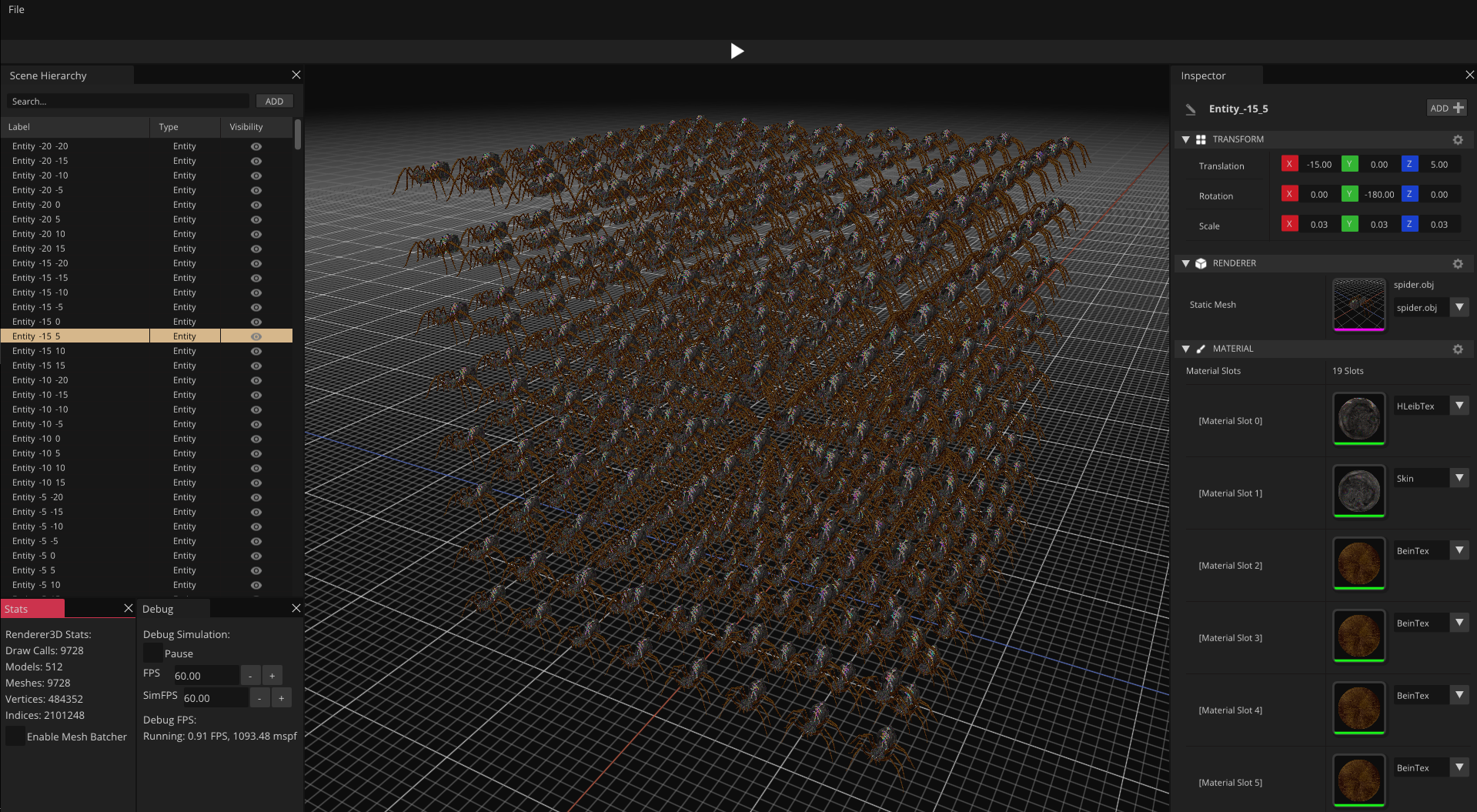

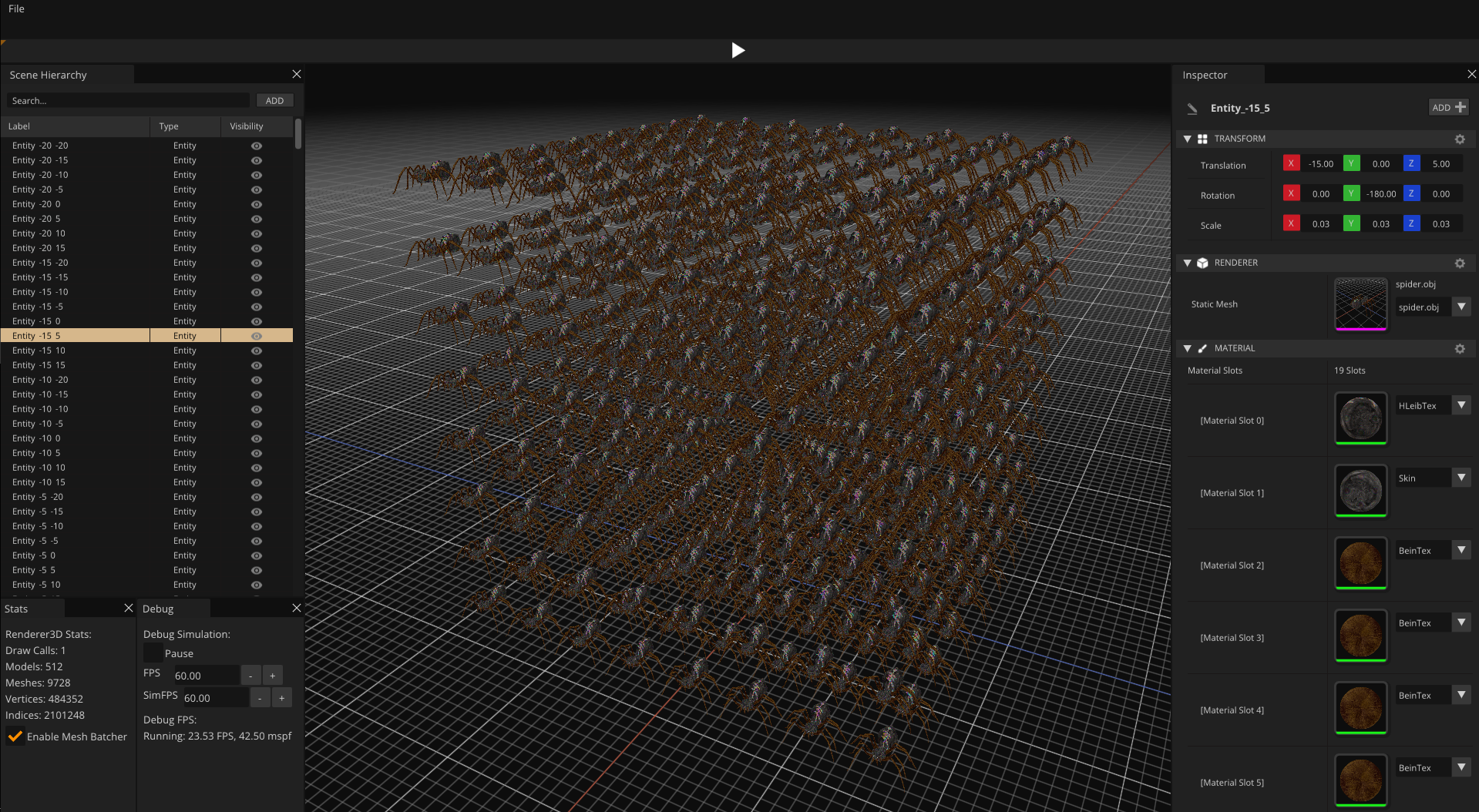

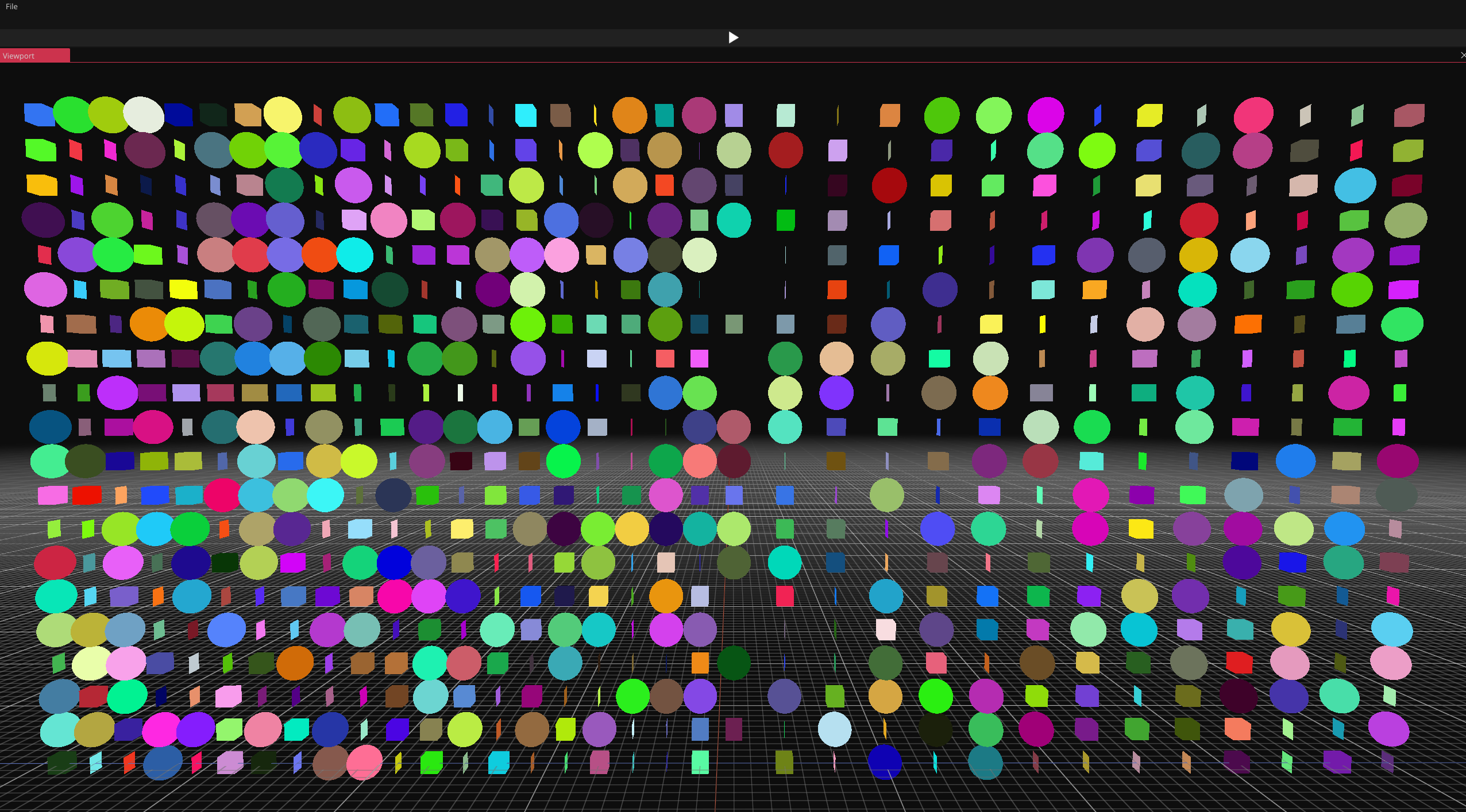

Stress Performance Optimization

TEST 1:

Stressing the engine with 512 draw calls and 500K vertices on a 75 Hz monitor.

TEST 2:

Stressing the engine with 10K draw calls and 500K vertices on a 75 Hz monitor.

Note that these performance tests were run on a single thread (the render thread is not implemented yet).

Entity Component System (ECS)

Another relevant aspect of a game engine is the Entity Component System (ECS). With it, the engine moves from having 3D models in a scene to having entities whose data is split into various types of components.

Initially, I considered incorporating the header-only library EnTT into my engine, as it is highly recommended for implementing an ECS. However, after several days of reviewing how it manages components and catching up on articles and videos about resource management and cache efficiency in relation to ECS, I decided to implement my own Entity Component System.

Cache-friendly ECS Approach

The methodology employed is largely inspired by the functionality of EnTT, particularly the fundamental concept of grouping components of the same type into containers, each with a reference to the ID of the entity they belong to.

In this way, the entity manager receives the component types we want to retrieve as template type arguments and returns only the entities that own all of those components. We then run the relevant behavior for each entity by obtaining those components and manipulating their data.

Components are designed to serve as data containers, and as such, they are aggregate structures devoid of functionalities. Operations and behaviors are managed by systems which iterate over a group of entities that meet the system's component requirements. For instance, the physics system only requires the transform component to manipulate an entity's position and the physics component to read the entity's velocity and apply calculations to update the new position values.

Through this decoupling between components and entities, we ensure that systems only execute relevant behavior logic by iterating through types of components, rather than entities. This approach improves cache efficiency and reduces cache misses, cutting down on trips to main memory, since all read and write operations are applied to the components the system requires.

Component data are stored contiguously since the components themselves are aggregate structures and are kept in static arrays. This contiguous storage in memory allows for quick access and minimizes cache misses.
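A minimal sketch of such per-type contiguous storage follows. It rests on assumptions: `ComponentStorageSketch` and `VelocitySketch` are hypothetical, and `std::vector` is used in place of the static arrays mentioned above to keep the example short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using EntityIdSketch = std::uint32_t;

// Example aggregate component: data only, no behavior.
struct VelocitySketch { float X; float Y; };

// Dense storage for one component type; each slot remembers its owning entity.
template <typename Component>
class ComponentStorageSketch
{
public:
    Component& Add(EntityIdSketch aOwner, const Component& aData)
    {
        assert(Find(aOwner) == nullptr && "Entity already has this component");
        mOwners.push_back(aOwner);
        mData.push_back(aData);
        return mData.back();
    }

    // Returns the component owned by aOwner, or nullptr if it has none.
    Component* Find(EntityIdSketch aOwner)
    {
        for (std::size_t i = 0; i < mOwners.size(); ++i)
            if (mOwners[i] == aOwner) return &mData[i];
        return nullptr;
    }

    // Systems iterate the packed array directly, in memory order.
    std::vector<Component>& Data() { return mData; }

private:
    std::vector<EntityIdSketch> mOwners; // mOwners[i] owns mData[i]
    std::vector<Component> mData;
};
```

A system that walks `Data()` touches consecutive memory addresses, which is the access pattern the cache-friendliness argument relies on.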

Compile-time Configuration

The Entity Manager is generated at compile time, as it is based on a template that is specialized on lists of component types. These lists are generated through metaprogramming, allowing the Entity Manager to register and create the different containers that will store each type of component during compilation.

A header allows the user to create an alias for the list of component types. This mechanism makes it possible to customize the entity manager according to the needs of the application.

    namespace ECS
    {
        using ComponentList = FUME::MP::TypeList
        <
            FUME::TagComponent,
            FUME::TransformComponent,
            FUME::RendererComponent,
            FUME::CameraComponent,
            GAME::InputComponent,
            GAME::PhysicsComponent,
            GAME::AIComponent
        >;

        using EntityManager = FUME::EntityManager< ComponentList >;
        using Entity = EntityManager::Entity;
    }
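How a type list can drive container creation at compile time might look roughly like the sketch below. It is an illustration under assumptions, not the engine's code: `TypeListSketch` and `EntityManagerSketch` are hypothetical, and the containers are plain `std::vector`s held in a `std::tuple`.

```cpp
#include <cassert>
#include <cstddef>
#include <tuple>
#include <type_traits>
#include <vector>

// Minimal stand-in for a metaprogramming type list (hypothetical).
template <typename... Ts>
struct TypeListSketch {};

template <typename List>
class EntityManagerSketch;

// Specializing on the list exposes the component pack to the class body.
template <typename... Components>
class EntityManagerSketch<TypeListSketch<Components...>>
{
public:
    static constexpr std::size_t ComponentTypeCount = sizeof...(Components);

    // Returns the container for one component type; unknown types fail to compile.
    template <typename Component>
    std::vector<Component>& Storage()
    {
        static_assert((std::is_same_v<Component, Components> || ...),
                      "Component type is not part of the component list");
        return std::get<std::vector<Component>>(mStorages);
    }

private:
    // One container per component type, fixed when the template is instantiated.
    std::tuple<std::vector<Components>...> mStorages;
};

struct TagSketch { int Id; };
struct TransformSketch { float X; };
using ManagerSketch = EntityManagerSketch<TypeListSketch<TagSketch, TransformSketch>>;
```

Asking for a component type that is missing from the list is then a compile error rather than a runtime failure, which is one practical benefit of configuring the manager at compile time.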

Entities are created in a simple and straightforward way and registered in the entity manager. They are then used to populate the static containers of component types, to which we assign the relevant data using modern C++20 features.

This includes aggregate structures and the use of designated initializers to assign each structure member.

    auto& e = EM.CreateEntity();

    EM.AddComponent< TagComponent >(e, TagComponent{"Cube"});
    EM.AddComponent< RendererComponent >(e, RendererComponent{.Mesh=CubeMesh, .Materials=CubeMesh->GetMaterials()});
    EM.AddComponent< TransformComponent >(e, TransformComponent
    {
        .Position = {0.f, 0.f, 10.f},
        .Rotation = {0.f, 0.f, 0.f},
        .Scale = {1.0f}
    });

As the entity manager can be customized according to the needs of my application, both the data structures that form the components and the systems that manage and manipulate this data efficiently are also scriptable constructs.

These are designed to be treated as custom scripts by the user, allowing them to create the type of component they want and manage its functionality in any system they choose. That's why I call them scriptable custom systems.

In this block of code, we can see how straightforward it is to set up a custom scriptable system. This system queries for the entities it needs based on the components it requires them to have. Then, during the update, it processes the query by executing a lambda object containing the desired behavior for the retrieved entities.

    using REQUIRED_CMP = FUME::MP::TypeList< GAME::PhysicsComponent, FUME::TransformComponent >;

    void OnStart(ECS::EntityManager& EM)
    {
        // RetrievedGroup is assumed to be a member of the system (declaration not shown).
        RetrievedGroup = EM.Query< REQUIRED_CMP >();
    }

    void OnUpdate(ECS::EntityManager& EM, double const dt)
    {
        const auto ExecuteSystemLogic = [&EM, dt]
        (ECS::Entity& e, GAME::PhysicsComponent& Phy, FUME::TransformComponent& Trs)
        {
            // Integrate velocity into position.
            Trs.Position += Phy.Velocity * dt;
        };

        EM.ProcessQuery< REQUIRED_CMP >(RetrievedGroup, ExecuteSystemLogic);
    }
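To make the query-then-lambda mechanism more concrete, here is a deliberately simplified sketch of what processing a two-component query could involve. It is not how the engine implements `ProcessQuery`: `ProcessBoth`, `PhysicsSketch` and `PositionSketch` are hypothetical, and `std::map` is used purely to keep the example short, not for cache efficiency.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

using QueryEntityIdSketch = std::uint32_t;

struct PhysicsSketch  { float Velocity; };
struct PositionSketch { float X; };

// Calls aLogic for every entity that owns both component types.
template <typename Fn>
void ProcessBoth(std::map<QueryEntityIdSketch, PhysicsSketch>& aPhysics,
                 std::map<QueryEntityIdSketch, PositionSketch>& aPositions,
                 Fn&& aLogic)
{
    for (auto& [Id, Phy] : aPhysics)
    {
        const auto It = aPositions.find(Id);
        if (It != aPositions.end())
            aLogic(Id, Phy, It->second);
    }
}
```

Entities missing either component are skipped, so the lambda only ever sees entities that meet the system's requirements.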

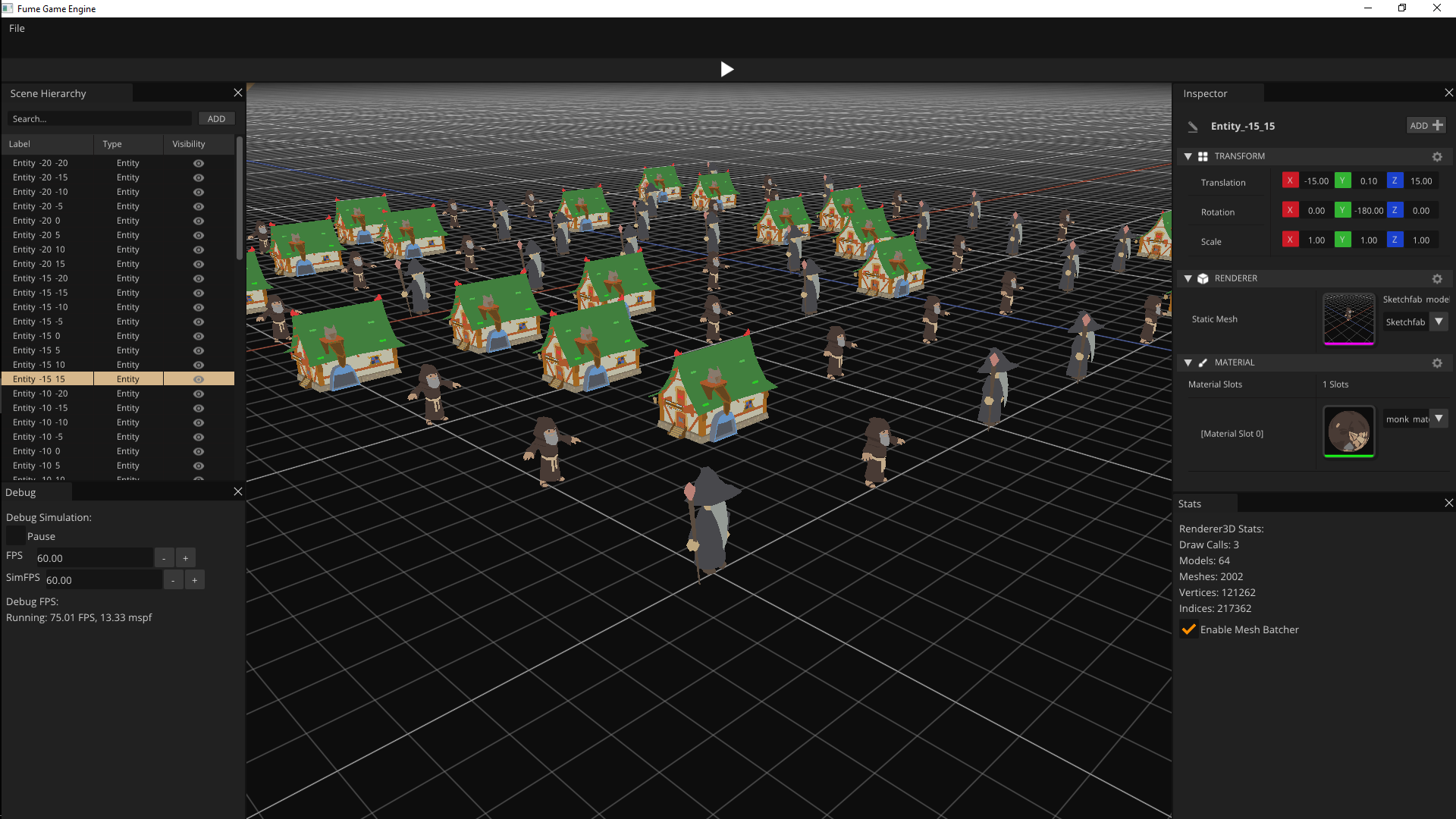

Below, we can see a bit of my custom ECS in action playing with the entities' data decoupled into several types of components that we can see in the implemented editor UI Panels.

Scene Controller

The scene controller is a dedicated class that manages the execution of scriptable game systems. It serves as a central hub for the systems' lifecycle: it invokes their initialization methods, updates them each frame, and handles their shutdown.

This could be considered a sort of Template Method Pattern class due to its ability to streamline modular control flow, promote code reuse, and facilitate system extensibility.

Scene Modes (Editor / Runtime)

Reusing this control flow, the controller provides two scene modes:

- Editor Mode: manages the lifecycle of the systems that always run, regardless of the frame rate.

- Runtime Mode: represents the state in which the game or application is actually executed and played.

    void Scene::OnUpdateRuntime(const double dt, const RuntimeCamera& Camera)
    {
        AISystem.OnUpdate(EM, dt);
        PhysicsSystem.OnUpdate(EM, dt);
        CameraSystem.OnUpdate(EM, Camera);
        RenderSystem.OnUpdate(EM, Camera);
        InputSystem.OnUpdate(EM);
    }

    void Scene::OnUpdateEditor(const EditorCamera& Camera)
    {
        RenderSystem.OnUpdate(EM, Camera);
        InputSystem.OnUpdate(EM);
    }

In Editor Mode, we only need to update the engine-level systems regardless of the frame rate, which at the moment are the RenderSystem, to draw the scene, and the InputSystem, to process input.

In Runtime Mode, we run all the scriptable game systems designed to process and manipulate each of the queried component types.
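The split between the two modes could also be expressed as a single update routine that dispatches on the current mode. The sketch below is one possible arrangement under assumptions, not the engine's Scene class: `SceneControllerSketch` and `SceneModeSketch` are hypothetical, and systems are reduced to callables taking a delta time.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

enum class SceneModeSketch { Editor, Runtime };

// Hypothetical controller: engine systems run in both modes, game systems only at runtime.
class SceneControllerSketch
{
public:
    using SystemFn = std::function<void(double)>;

    void AddEngineSystem(SystemFn aSystem) { mEngineSystems.push_back(std::move(aSystem)); }
    void AddGameSystem(SystemFn aSystem)   { mGameSystems.push_back(std::move(aSystem)); }
    void SetMode(SceneModeSketch aMode)    { mMode = aMode; }

    void OnUpdate(double aDt)
    {
        assert(aDt >= 0.0 && "Delta time must not be negative");

        // Game systems are skipped entirely while editing.
        if (mMode == SceneModeSketch::Runtime)
            for (auto& Update : mGameSystems) Update(aDt);

        // Engine systems (rendering, input) always run.
        for (auto& Update : mEngineSystems) Update(aDt);
    }

private:
    SceneModeSketch mMode{SceneModeSketch::Editor};
    std::vector<SystemFn> mEngineSystems;
    std::vector<SystemFn> mGameSystems;
};
```

Switching between editing and playing is then a single `SetMode` call, and the order of system updates at runtime mirrors the listing above: game systems first, then rendering and input.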

In the example below, we can see the AISystem working alongside the PhysicsSystem and InputSystem as we move our Player entity.

Carlos Peña
